Hello David,

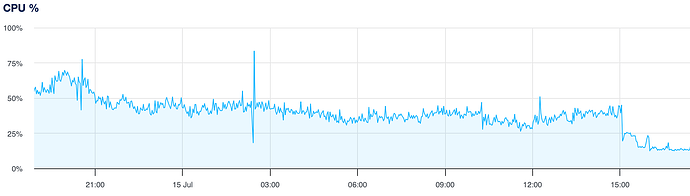

The challenge is matching the > 20 million spots per day (without FT4/FT8 DXCC filtering) against the 100k+ triggers (with even more conditions) currently defined, without requiring a big expensive cluster with lots of CPU cores. Currently HamAlert runs on 16 dedicated cores, which is enough for the filtered spots, but not for the full feed.

Obviously a naive linear search of all triggers/conditions for each spot is long out of the question, and direct queries against MongoDB, where the triggers are stored, are also inefficient due to the query complexity, even with lots of indexes.

Therefore, the HamAlert backend uses a scheme that involves translating all the trigger definitions into hash tables, one for each spot attribute (e.g. band, mode, DXCC etc.). The keys of the hash tables are the possible attribute values, like ‘10m’, ‘20m’, or ‘cw’, ‘ssb’, or DXCC numbers), and the values are a list of trigger IDs (integers, in ascending order) that match this particular attribute value. So if somebody defined a trigger with a band condition for 40m, 20m and 10m, then that trigger ID would appear in the value lists of the band hash table under the keys ‘40m’, ‘20m’ and ‘10m’. If a trigger doesn’t have a particular condition, then it is included under a special ‘null’ key in that hash table, which is always appended to the list obtained by looking up the actual spot attribute value.

In this way, the backend only has to do one hash table lookup for each spot attribute, resulting in about 30 long lists of trigger IDs. On those, it performs set intersection, i.e. finding trigger IDs that appear in all the lists. This is made much more efficient due to the fact that the trigger IDs are already sorted, and “galloping lookahead” using binary search can be used.

The result is a list of matching trigger IDs, which can then be fetched to send the notifications to the corresponding users.

This is a simplified description; the reality is a bit more complex due to things like ‘not’ conditions or time ranges. And rate limiting notifications also adds more processing expense. But you get the general idea. It also shows why HamAlert does not like it when users define overly general triggers (like with only a ‘20m’ band condition), as those generate many thousand matches per day, only to be weeded out afterwards by the rate limiting. Such mostly useless triggers are automatically disabled to reduce the load.

If you have an entirely different idea on how to do trigger matching, I’m all ears. My current plan, as already outlined in the other post, is to rewrite the matching engine in Rust with the same basic algorithm, to hopefully gain enough performance over the current Node.js implementation to warrant turning off the DXCC filtering again. The basic problem of FT8 and digimode spots in general remains though: the number of spots tends to increase with the square of the number of users, as almost every user is also a spotter.

73,

Manuel HB9DQM